What is Kubernetes and a Kubernetes Cluster?

Kubernetes is an open-source container orchestration tool originally developed by Google for managing microservices or containerized applications across a distributed cluster of nodes. It is widely regarded as key to cloud-native application strategies.

Kubernetes (K8s) runs across several nodes, and the collection of nodes is called a cluster. K8s clusters allow application developers to orchestrate and monitor containers across multiple physical, virtual, or cloud servers. A container is a ready-to-run software package that includes everything needed to run an application on a node.

Let’s quickly take a closer look at Kubernetes components!

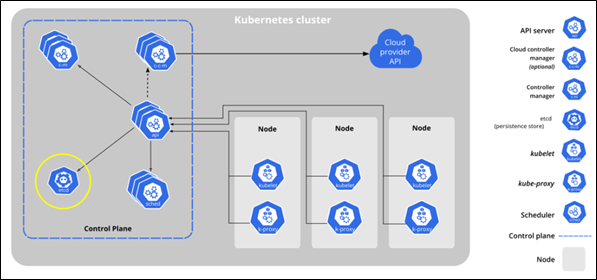

Kubernetes architecture components include the following.

- Control plane (master components)
  - Kubernetes API server
  - Kubernetes scheduler
  - Kubernetes controller manager
  - etcd

- Nodes (worker components)
  - Container runtime engine, such as Docker
  - kubelet service
  - Kubernetes proxy service (kube-proxy)

Based on these two main sets of components, we can categorize the data for a Kubernetes cluster as:

- Cluster resource data – etcd and other relevant configuration data such as certificates to restore the control plane

- Cloud configuration data – node pool config, load balancer info, add-ons etc.

- Persistent Volume Data – for the containerized applications running on nodes in the cluster

Why Should You Back Up Kubernetes Clusters?

Initially, Kubernetes was mostly used for stateless applications with short lifespans. These were easy to develop, deploy, and retire, making them ideal for web apps or test environments.

In such cases, backing up the etcd key-value store—which resides in the control plane—was often enough to restore applications after a failure.

Today, organizations are running stateful containerized applications that manage business-critical data. This shift demands more robust backup strategies to ensure high availability and business continuity.

As access to critical data increases, minimizing downtime becomes essential. Reliable Kubernetes backup and recovery is no longer optional—it’s a requirement.

How is Traditional Backup Different from Kubernetes Cluster Backup?

Admin teams often take application-aware or crash-consistent backups of hosts or servers independently to prevent data loss or disaster.

However, using this traditional approach for Kubernetes means you’ll need separate backup mechanisms for:

- The etcd database

- Application data on primary storage

- Persistent volume (PV) information

This might work for a single cluster, but managing backups across multiple clusters quickly becomes complex and unsustainable.

Kubernetes is inherently distributed, with applications running in containers across multiple nodes. Effective backup solutions must understand:

- What constitutes a Kubernetes app

- Its underlying storage

- Resources like pods, nodes, and namespaces

Kubernetes also includes abstraction layers such as:

- Persistent Volumes (PVs) for storage

- Secrets, service accounts, and deployments for managing access and communication between containers

Traditional backup tools lack Kubernetes awareness. They don’t recognize Kubernetes APIs or its components, so block-level snapshots can lead to incomplete restores or system crashes.

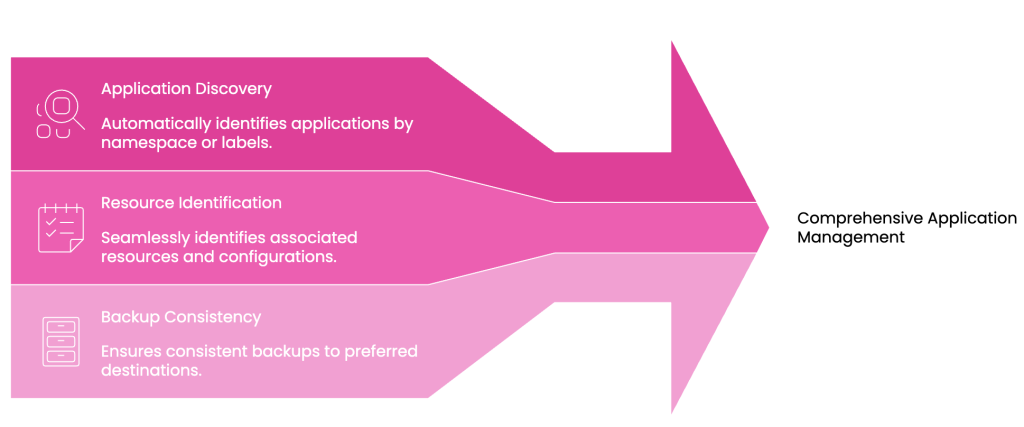

To avoid this, a Kubernetes-native data protection solution must:

- Automatically discover applications by namespace or labels

- Identify associated resources, volumes, and configurations

- Enable consistent backups to your preferred destination
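As a rough illustration of discovery by namespace and label, the sketch below wraps a single kubectl query in a helper. The function name `discover_app`, the list of resource kinds, and the example label are assumptions made for illustration; a real data protection product does considerably more than this. The helper prints the command by default and only runs it when `DRY_RUN=0`.

```shell
# Sketch only: list the objects that make up one application.
# APP_KINDS is an illustrative ASSUMPTION, not an exhaustive set of kinds.
APP_KINDS="deployments,statefulsets,services,configmaps,secrets,persistentvolumeclaims"

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = namespace, $2 = label selector (for example app=shop)
discover_app() {
  run kubectl get "$APP_KINDS" --namespace "$1" --selector "$2" --output name
}

# Usage (hypothetical namespace and label):
#   discover_app shop app=shop
```

The output of such a query is only the starting point: the persistent volume claims it returns still have to be mapped to their volumes before a consistent backup can be taken.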

3 Ways To Protect Your Kubernetes Applications

Now that we understand why Kubernetes cluster backup is important, let us look at the methods available to solve the challenges of Kubernetes data protection.

1) etcd and Kubernetes

etcd is the key-value datastore of the Kubernetes cluster and a critical, core component of the Kubernetes control plane. It stores cluster data, including all Kubernetes objects such as cluster-scoped resources, deployments, and pod information. The etcd server is the only stateful component of the control plane, and keeping it healthy helps cloud-native applications maintain consistent uptime.

Since Kubernetes stores all API objects and settings in etcd, the cluster cannot continue to run if your etcd data is corrupted or lost. Backing up this store is therefore enough to restore the cluster state, meaning its API objects and configuration, although not the application data held in persistent volumes.
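To see this for yourself, you can list a few of the keys the API server keeps in etcd. The sketch below is hedged: the endpoint and certificate paths are assumptions based on kubeadm defaults, `/registry` is the API server's default key prefix, and `etcd_list_keys` is a hypothetical helper name. It prints the command unless `DRY_RUN=0`.

```shell
# Sketch only: peek at the Kubernetes objects stored in etcd.
# Endpoint and certificate paths are ASSUMPTIONS (kubeadm defaults).
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://127.0.0.1:2379}"
ETCD_PKI="${ETCD_PKI:-/etc/kubernetes/pki/etcd}"

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = maximum number of keys to list under the /registry prefix
etcd_list_keys() {
  run env ETCDCTL_API=3 etcdctl \
    --endpoints="$ETCD_ENDPOINT" \
    --cacert="$ETCD_PKI/ca.crt" \
    --cert="$ETCD_PKI/server.crt" \
    --key="$ETCD_PKI/server.key" \
    get /registry --prefix --keys-only --limit="$1"
}

# Usage: etcd_list_keys 20
```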

How to use etcd Backup and Restore

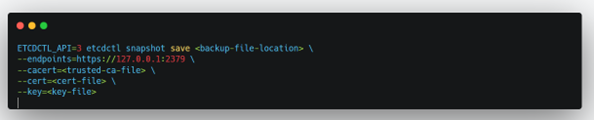

etcd is open source, available on GitHub, and backed by the Cloud Native Computing Foundation. You can deploy etcd as a pod on the control plane node, or deploy it externally for resiliency and security. To interact with etcd for backup and restore, run all commands directly on a control plane node using the etcdctl command-line utility. etcdctl has a snapshot option that makes it relatively easy to take a backup of the cluster.

The backup and restore commands use the v3 API of etcdctl (ETCDCTL_API=3) when running the snapshot command.

Note: Store the backup file in a location outside the cluster, in a different failure domain, so that your backup data is safe if the control plane is destroyed. For example, use AWS S3 or a similar service.
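A minimal sketch of the backup and restore skeleton is shown here. The endpoint, the certificate paths (kubeadm defaults), the bucket name, and the helper names are all assumptions, so adjust them to your cluster. On newer etcd releases the restore subcommand has moved to the separate etcdutl tool, so treat the restore line as version-dependent. Commands are printed rather than executed unless `DRY_RUN=0`.

```shell
# Sketch only: etcd snapshot backup and restore with etcdctl (v3 API).
# Endpoint and certificate paths are ASSUMPTIONS (kubeadm defaults).
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://127.0.0.1:2379}"
ETCD_PKI="${ETCD_PKI:-/etc/kubernetes/pki/etcd}"

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = snapshot file to write
etcd_backup() {
  run env ETCDCTL_API=3 etcdctl \
    --endpoints="$ETCD_ENDPOINT" \
    --cacert="$ETCD_PKI/ca.crt" \
    --cert="$ETCD_PKI/server.crt" \
    --key="$ETCD_PKI/server.key" \
    snapshot save "$1"
}

# $1 = snapshot file, $2 = bucket name (hypothetical); copies the file off the node
etcd_offsite_copy() {
  run aws s3 cp "$1" "s3://$2/etcd/$(basename "$1")"
}

# $1 = snapshot file, $2 = new, empty data directory to restore into
etcd_restore() {
  run env ETCDCTL_API=3 etcdctl snapshot restore "$1" --data-dir="$2"
}

# Usage (hypothetical paths and bucket):
#   etcd_backup /var/backups/etcd-snapshot.db
#   etcd_offsite_copy /var/backups/etcd-snapshot.db my-backup-bucket
#   etcd_restore /var/backups/etcd-snapshot.db /var/lib/etcd-restored
```

Restoring into a new data directory is only part of the job: etcd still has to be pointed at that directory and restarted, which is where the manual steps come in.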

Backing up the etcd database is only possible if you run a self-managed cluster with access to etcd. When the cluster is provisioned and managed by a third-party vendor, it becomes difficult. Restoring an etcd backup is also not the easiest process: it involves a few manual steps, and you may need to restart the etcd pods along the way.

2) Velero – Open Source Tool

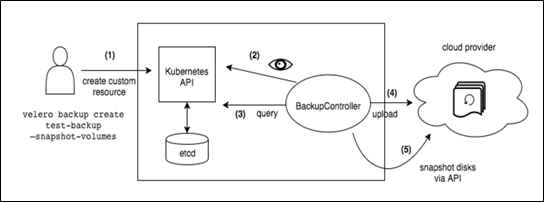

Velero is a popular open-source tool to back up, restore, migrate, and replicate your Kubernetes cluster resources and persistent volumes to the same or another cluster. It compresses backups of Kubernetes objects and stores them in object storage, takes snapshots of Persistent Volumes on the cloud provider’s block storage, and restores clusters and Persistent Volumes to their previous states during disaster recovery.

Velero addresses various Kubernetes data protection use cases, including but not limited to:

- Taking backups of your cluster to allow for restore in case of infrastructure loss or corruption

- Replicating production applications to dev and test clusters

Unlike direct etcd backup, Velero takes a generic approach: it reads cluster state through the Kubernetes API server rather than from etcd directly, and backs up resources as Kubernetes objects.

Note: The “BackupController” begins the backup process and collects the data to back up by querying the API server for resources. It is also responsible for making calls to the object storage service.

How to Install and Use Velero?

Velero consists of two components:

- A server that runs as a set of resources in your Kubernetes cluster

- A command-line client that runs locally.

Velero can be installed in two ways:

- Follow the Velero installation guide to install the Velero CLI on a machine that has access to the Kubernetes cluster

- Install the Velero Helm chart from VMware Tanzu.
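For the Helm route, the install might look roughly like the sketch below. The chart repository URL, the release name `velero`, the namespace `velero`, and the values file are assumptions not taken from this article; check the chart's own documentation for the values your storage provider needs. Commands are printed unless `DRY_RUN=0`.

```shell
# Sketch only: install Velero from the VMware Tanzu Helm chart repository.
# Repository URL, release name and namespace are ASSUMPTIONS.

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = Helm values file with provider, bucket and credential settings (hypothetical file)
velero_helm_install() {
  run helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts
  run helm repo update
  run helm install velero vmware-tanzu/velero \
    --namespace velero --create-namespace \
    --values "$1"
}

# Usage: velero_helm_install ./velero-values.yaml
```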

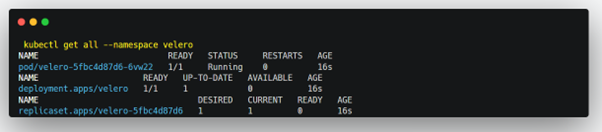

After the installation is complete, wait for the Velero resources to come up.
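One way to wait is to watch the rollout of the Velero deployment. This sketch assumes the deployment is named `velero` and lives in the `velero` namespace, which matches a default install but may differ in yours; the two-minute timeout is arbitrary. Commands are printed unless `DRY_RUN=0`.

```shell
# Sketch only: wait until the Velero server is ready.
# Deployment name and namespace "velero" are ASSUMPTIONS (default install).

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

velero_wait_ready() {
  # Block until the deployment has rolled out, or give up after two minutes.
  run kubectl rollout status deployment/velero --namespace velero --timeout=120s
  # Show the resulting pods for a quick visual check.
  run kubectl get pods --namespace velero
}

# Usage: velero_wait_ready
```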

Velero stores backups of Kubernetes objects in S3-compatible storage. For example, you can use Amazon S3. Follow the Velero setup instructions for your storage provider.
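As a hedged example for Amazon S3, a backup storage location can be registered and checked with the Velero CLI roughly as follows. The location name, bucket, and region are placeholders, and the sketch assumes the AWS object store plugin and credentials are already configured as described in the provider's setup instructions. Commands are printed unless `DRY_RUN=0`.

```shell
# Sketch only: register an S3 bucket as a Velero backup storage location.
# Assumes the AWS plugin and credentials are already set up (not shown here).

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = location name, $2 = bucket, $3 = AWS region (all hypothetical values)
velero_add_s3_location() {
  run velero backup-location create "$1" \
    --provider aws \
    --bucket "$2" \
    --config "region=$3"
  # List the locations so you can confirm the new one is reported as available.
  run velero backup-location get
}

# Usage: velero_add_s3_location primary my-velero-bucket us-east-1
```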

Once you have Velero set up on your cluster, you can orchestrate backup and restore from the Velero CLI.

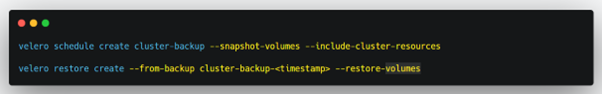

Backup and Restore Velero Command Examples

Typical operations include taking a one-off backup, creating a scheduled backup, and restoring your applications from a backup.
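The sketch below shows one plausible shape for these commands. The backup, schedule, and namespace names, the cron expression, and the seven-day retention are illustrative assumptions, not recommendations. Commands are printed unless `DRY_RUN=0`; the printed form drops shell quoting, so use it for inspection rather than copy and paste.

```shell
# Sketch only: on-demand backup, scheduled backup, and restore with the Velero CLI.
# All names, the cron expression, and the TTL are hypothetical examples.

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 = backup name, $2 = namespace to include
velero_backup() {
  run velero backup create "$1" --include-namespaces "$2" --wait
}

# $1 = schedule name, $2 = namespace, $3 = cron expression; backups expire after 7 days
velero_schedule() {
  run velero schedule create "$1" --schedule="$3" --include-namespaces "$2" --ttl 168h0m0s
}

# $1 = restore name, $2 = name of the backup to restore from
velero_restore() {
  run velero restore create "$1" --from-backup "$2"
}

# Usage:
#   velero_backup shop-backup shop
#   velero_schedule shop-nightly shop '0 1 * * *'
#   velero_restore shop-restore shop-backup
```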

Velero becomes more difficult to manage as organizations grow. Using Velero to back up a single cluster is easy; however, when you have multiple clusters in your environment, you have to run the backup independently on each cluster or use an orchestration tool to do it. Avoiding that manual effort means writing and maintaining backup scripts, which can be tedious. Velero also lacks a graphical user interface (GUI), so managing multiple clusters from one screen is tough. Because it is an open-source tool, you cannot expect a quick response from support when you have questions or run into issues. Velero also requires an investment in learning and experimenting before you can use it well.

3) Vendor-Specific Kubernetes Data Protection Solutions

There are many enterprise Kubernetes data protection solutions available today. The right solution should be granular to the container level and aware of Kubernetes and its abstractions, such as namespaces and the underlying storage. It must also provide application-aware and application-consistent backups without risk of data corruption. RPO and RTO need to be stricter because applications must stay up and running, so backing up the applications together with all the Kubernetes cluster configuration is important.

Vendors whose products let users own their Kubernetes data protection and disaster recovery (DR) policies include CloudCasa, Kasten K10, Portworx PX-Backup, and Trilio. If you rely on managed Kubernetes services such as EKS, AKS, or GKE to host your clusters, it may make sense to choose a fully managed backup service such as CloudCasa.

Make the Right Move!

An organization must evaluate Kubernetes backup tools and solutions based on their unique needs and requirements. Here are several considerations to help with your planning:

- Requirements – RPO, RTO, airgap, immutability, self-managed or SaaS

- Compatibility – PV types (CSI, non-CSI, NFS, etc.), cloud provider and distribution

- Product features – multi-cloud and multi-cluster management, granular recovery, API automation, reporting and alerting

- Security and compliance – RBAC, encryption, MFA, suspicious activity detection, private registries and endpoints, etc.

- Application awareness – database support via prebuilt or custom database hooks

- Trial period – time or resource limited

- Cost and licensing – based on capacity, worker nodes, or pods/apps

- Support access – phone, email, chat, remote sessions, knowledge base, and SLA

Kubernetes clusters often span different environments or cloud providers. Ensuring that all of their components are backed up periodically is the primary goal of any Kubernetes data protection solution. Since backups need to be performed regularly, automating the process is essential. Using multiple tools for different backup needs seems easy, but when disaster strikes, coordinating those tools to bring the Kubernetes cluster back to a single point in time is extremely challenging. Along with the ability to back up and restore, the solution needs good monitoring of backup execution.

As a Kubernetes backup-as-a-service solution, CloudCasa removes the complexity of managing traditional backup infrastructure and provides enterprise-level Kubernetes data protection. IT operations staff need not be Kubernetes experts, and DevOps teams need not be storage and database protection experts, to back up and recover Kubernetes clusters and even cloud databases. Cyber-resilience and ransomware protection are built in.

You can try the free service plan for CloudCasa, no strings attached. We’d be happy to connect with you over a call and talk through your Kubernetes data protection challenges. If you want a custom demonstration or just want to chat with us, get in contact via casa@cloudcasa.io.